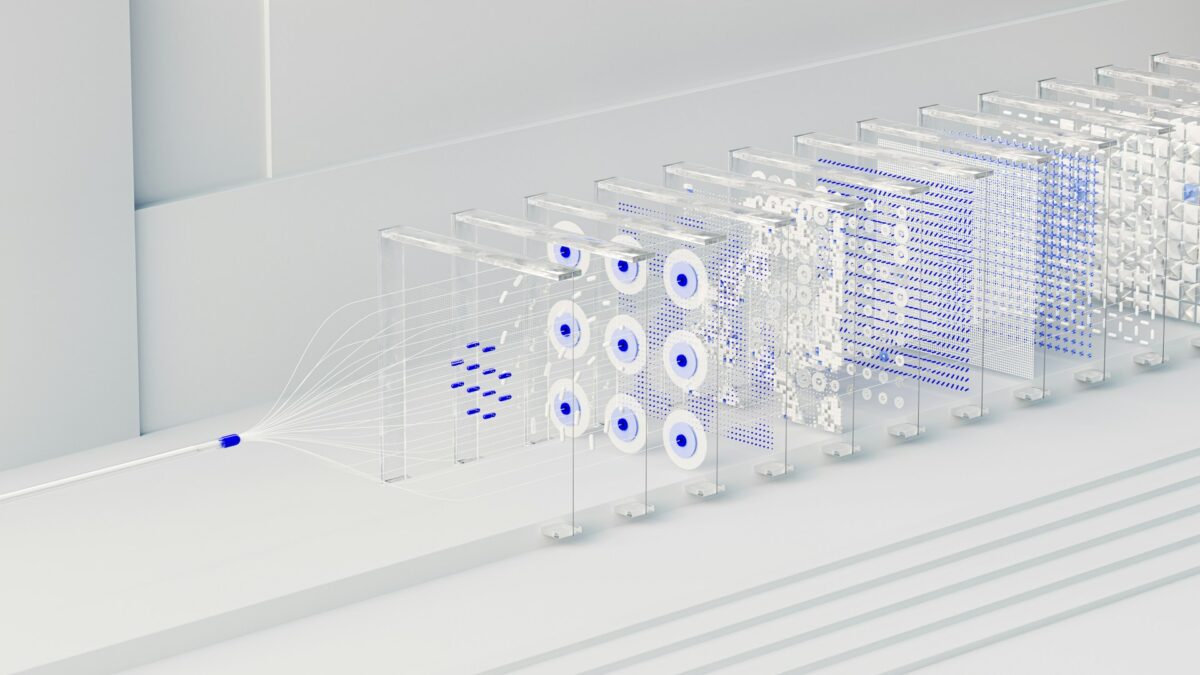

Maybe it’s your first time hearing the term “synthetic data.” What could it be? Synthetic data is like fuel for your ML models, made from non-organic materials.

Okay, jokes aside, what actually is synthetic data? It’s a little complex, but in simple terms, it’s artificially generated data that mimics real data. First, we tried to mimic human intelligence. Now, we’re trying to mimic the data that comes from humans.

But here’s why it’s actually useful:

Let’s say your data is sensitive and you don’t want to expose it just to improve your ML models. But you still need to improve them. So why not use synthetic data instead? It’s not real, but it’s close, and that improves privacy a bit, right?

It also helps when your data is scarce or imbalanced, or when you want to augment your dataset for better generalization. Unlike random noise, well-generated synthetic data is designed to preserve the patterns and correlations found in the real data, which makes it way more valuable.
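To make the "unlike random noise" point concrete, here is a minimal sketch using only the standard library. The column names (`income`, `price`) and the tiny line-plus-noise generator are assumptions for illustration; this is not how SDV works internally, just the simplest generator that keeps a correlation intact.

```python
import math
import random

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

rng = random.Random(42)

# Toy "real" data: income drives price, plus some noise.
income = [rng.gauss(5.0, 2.0) for _ in range(2000)]
price = [0.4 * x + rng.gauss(0.0, 0.5) for x in income]

# "Random noise" baseline: shuffling one column keeps its distribution
# but destroys its relationship with the other column.
noise_price = price[:]
rng.shuffle(noise_price)

# Tiny generator: fit a line and the residual spread, then sample new rows.
n = len(income)
mean_inc = sum(income) / n
std_inc = math.sqrt(sum((x - mean_inc) ** 2 for x in income) / n)
mean_price = sum(price) / n
slope = sum((x - mean_inc) * (y - mean_price)
            for x, y in zip(income, price)) / (n * std_inc ** 2)
intercept = mean_price - slope * mean_inc
resid_std = math.sqrt(
    sum((y - (intercept + slope * x)) ** 2 for x, y in zip(income, price)) / n
)

syn_income = [rng.gauss(mean_inc, std_inc) for _ in range(n)]
syn_price = [intercept + slope * x + rng.gauss(0.0, resid_std) for x in syn_income]

real_corr = pearson(income, price)
noise_corr = pearson(income, noise_price)
syn_corr = pearson(syn_income, syn_price)

print(f"real: {real_corr:.2f}  noise: {noise_corr:.2f}  synthetic: {syn_corr:.2f}")
```

The shuffled column should show a correlation near zero, while the sampled rows should land close to the real correlation even though none of them is a real row.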

Tools for Synthetic Data Generation

No need to pretend here, I don’t know any other tool besides the SDV library in Python. That’s why I’m using it for this article. I’m still new to the term synthetic data myself, but I believe this library offers a really simple solution.

The SDV library supports tabular data, including relational and time-series formats. It also offers modeling based on Gaussian Copulas, CTGANs, and more, so pretty much everything you’ll need is probably covered by this one library.

Generating Synthetic Data with SDV

I’ll assume you already have the SDV library installed on your machine, so I’ll skip that part.

Let’s start by loading a dataset with real data:

from sklearn.datasets import fetch_california_housing

import pandas as pd

data = fetch_california_housing(as_frame=True)

df = data.frame

df = df.sample(n=1000, random_state=42)

It’s a simple dataset that we can use to test the SDV library. This dataset includes features like median income, house age, average rooms, population, and more.

from sdv.metadata.single_table import SingleTableMetadata

from sdv.single_table import CTGANSynthesizer

metadata = SingleTableMetadata()

metadata.detect_from_dataframe(df)

synthesizer = CTGANSynthesizer(metadata)

synthesizer.fit(df)

Okay, now that we’re using the SDV library for the first time, I want to pause here and explain what the hell is going on.

CTGAN stands for Conditional Tabular GAN. If that term still sounds unfamiliar, it basically helps us generate realistic synthetic tabular data.

It works by training two neural networks simultaneously: a generator that tries to produce fake data indistinguishable from real data, and a discriminator that learns to tell real data from fake.
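For intuition about that tug-of-war, here is a small sketch of the classic GAN losses computed on made-up discriminator outputs. The numbers are hypothetical, and as far as I know CTGAN itself trains with a Wasserstein-style loss plus a gradient penalty rather than this plain cross-entropy form, so treat it as an illustration of the idea only.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: the discriminator wants d_real -> 1 and d_fake -> 0."""
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Non-saturating generator loss: the generator wants d_fake -> 1."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)

# Hypothetical discriminator outputs: probability that a row is real.
d_real = [0.9, 0.8, 0.95]
early_fakes = [0.1, 0.2, 0.05]   # early in training: fakes are easy to spot
late_fakes = [0.5, 0.45, 0.55]   # later: the discriminator is close to guessing

g_early, g_late = generator_loss(early_fakes), generator_loss(late_fakes)
d_early = discriminator_loss(d_real, early_fakes)
d_late = discriminator_loss(d_real, late_fakes)

print(f"generator loss:     early={g_early:.3f}  late={g_late:.3f}")
print(f"discriminator loss: early={d_early:.3f}  late={d_late:.3f}")
```

As the fakes get harder to spot, the generator's loss falls and the discriminator's loss rises, which is exactly the opposing pressure the two networks put on each other.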

Metadata tells the synthesizer the data types and structure of each column (like numerical or categorical), so it knows how to model and generate realistic synthetic data matching your original dataset.
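If you’re wondering what "detecting" metadata roughly amounts to, here is a hypothetical sketch. `infer_sdtypes` is my own illustrative helper, not SDV’s implementation, and the `near_coast` and `region` columns are invented for the example; only the type names (numerical, categorical, boolean) mirror the kinds of column types SDV uses.

```python
def infer_sdtypes(rows):
    """Guess a coarse type per column from a list of row dicts (hypothetical helper)."""
    sdtypes = {}
    for column in rows[0]:
        values = [row[column] for row in rows]
        # Check bool first: in Python, bool is a subclass of int.
        if all(isinstance(v, bool) for v in values):
            sdtypes[column] = "boolean"
        elif all(isinstance(v, (int, float)) and not isinstance(v, bool) for v in values):
            sdtypes[column] = "numerical"
        else:
            sdtypes[column] = "categorical"
    return sdtypes

# Made-up rows; near_coast and region are not part of the California housing data.
rows = [
    {"MedInc": 8.3, "HouseAge": 41, "near_coast": True, "region": "north"},
    {"MedInc": 5.6, "HouseAge": 21, "near_coast": False, "region": "south"},
    {"MedInc": 7.2, "HouseAge": 52, "near_coast": True, "region": "north"},
]

detected = infer_sdtypes(rows)
print(detected)
```

The real detection in SDV handles more cases (dates, IDs, missing values), so it’s worth inspecting the detected metadata before fitting.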

Cool concept, gotta agree. So basically, we create a model and train it with our real data to generate fake data that looks just like the real thing.

syn_df = synthesizer.sample(num_rows=1000)

Now we have a synthetic copy of the dataset, so we can actually compare it with the real data later too.

print("Real:\n", df.describe())

print("Synthetic:\n", syn_df.describe())

You can also visualize the real and synthetic datasets; it’s up to you.
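Summary tables are a good start, but they’re hard to compare by eye. One common way to boil a column comparison down to a single number is the two-sample Kolmogorov-Smirnov statistic: the largest gap between the two empirical CDFs. Below is a minimal standard-library sketch with toy values standing in for one real column and its synthetic counterpart; in practice you could reach for `scipy.stats.ks_2samp` instead.

```python
import bisect

def ks_statistic(sample_a, sample_b):
    """Largest gap between the two empirical CDFs (0 = identical, 1 = disjoint)."""
    a, b = sorted(sample_a), sorted(sample_b)
    max_gap = 0.0
    # The largest gap always occurs at one of the observed values.
    for value in a + b:
        cdf_a = bisect.bisect_right(a, value) / len(a)
        cdf_b = bisect.bisect_right(b, value) / len(b)
        max_gap = max(max_gap, abs(cdf_a - cdf_b))
    return max_gap

# Toy stand-ins for one real column and two candidate synthetic columns.
real_col = [1.0, 2.0, 3.0, 4.0, 5.0]
close_col = [1.5, 2.5, 3.5, 4.5, 5.5]       # slightly shifted
far_col = [10.0, 11.0, 12.0, 13.0, 14.0]    # no overlap at all

print("close:", ks_statistic(real_col, close_col))
print("far:  ", ks_statistic(real_col, far_col))
```

A value near 0 means the two columns are distributed similarly; a value near 1 means they barely overlap. Running this per column gives a quick fidelity check before training anything.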

Training Models and Comparing Their Performance

Let’s start by training a Random Forest regression model on real data first, and later we will do the same for synthetic data to compare the results.

from sklearn.model_selection import train_test_split

from sklearn.ensemble import RandomForestRegressor

from sklearn.metrics import mean_squared_error

import numpy as np

X_real = df.drop('MedHouseVal', axis=1)

y_real = df['MedHouseVal']

X_train, X_test, y_train, y_test = train_test_split(X_real, y_real, test_size=0.3, random_state=42)

reg_real = RandomForestRegressor()

reg_real.fit(X_train, y_train)

predictions = reg_real.predict(X_test)

real_rmse = np.sqrt(mean_squared_error(y_test, predictions))

And now it’s time to train a model on the synthetic data:

X_syn = syn_df.drop('MedHouseVal', axis=1)

y_syn = syn_df['MedHouseVal']

reg_syn = RandomForestRegressor()

reg_syn.fit(X_syn, y_syn)

# Note: this scores the model on the same rows it was trained on (training error)
syn_predictions = reg_syn.predict(X_syn)

syn_rmse = np.sqrt(mean_squared_error(y_syn, syn_predictions))

We now have two regression models: the first trained on real data and the second trained on synthetic data. Let’s compare their performance using RMSE.

print(f"RMSE (Real): {real_rmse}")

print(f"RMSE (Synthetic): {syn_rmse}")

# RMSE (Real): 0.6667749768354848

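# RMSE (Synthetic): 0.49211807503924404

Getting close scores is expected, but here the synthetic-data model appears to beat the real-data model, which is unexpected. The main reason is that the comparison isn’t like-for-like: the real-data model is scored on a held-out test set, while the synthetic-data model is scored on the same rows it was trained on, so its RMSE is a training error and will look optimistic. A fairer check is to score the synthetic-trained model on the real test set (X_test, y_test). Beyond that, a few other factors might contribute.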

The synthetic data might closely match the distribution and patterns of the real data. That would explain similar scores, but on its own it is unlikely to make the synthetic-data model better.

The real-data model might have overfitted on the smaller dataset used for training (700 rows after the 70/30 split, versus 1,000 synthetic rows), and this could be part of the reason for our result. Since we’re only trying out the SDV library here, we won’t investigate this further.

In some cases, the synthetic data can be too similar to the training data, which may lead to better training results but could negatively impact generalization to new, unseen data.
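One thing worth checking in the code above: `reg_real` is scored on held-out rows, while `reg_syn` is scored on its own training rows. To see how much that choice can matter, here is a small standard-library sketch. The `OneNearestNeighbor` class and the toy data are stand-ins I made up for illustration; with the article’s objects, the equivalent check would be scoring `reg_syn.predict(X_test)` against `y_test`.

```python
import math
import random

def rmse(y_true, y_pred):
    """Root mean squared error over two equal-length sequences."""
    errors = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(errors) / len(errors))

class OneNearestNeighbor:
    """Toy stand-in regressor: predicts the target of the closest training row."""

    def fit(self, X, y):
        self.X, self.y = list(X), list(y)
        return self

    def predict(self, X):
        return [
            self.y[min(range(len(self.X)), key=lambda i: abs(self.X[i] - x))]
            for x in X
        ]

rng = random.Random(0)

def make_rows(n):
    """Toy data: y = 2x plus Gaussian noise."""
    xs = [rng.uniform(0, 10) for _ in range(n)]
    ys = [2 * x + rng.gauss(0, 1) for x in xs]
    return xs, ys

X_syn, y_syn = make_rows(200)     # stand-in for the synthetic training rows
X_test, y_test = make_rows(100)   # stand-in for the real held-out rows

model = OneNearestNeighbor().fit(X_syn, y_syn)
train_rmse = rmse(y_syn, model.predict(X_syn))      # rows the model has seen
holdout_rmse = rmse(y_test, model.predict(X_test))  # rows it has never seen

print(f"RMSE on training rows: {train_rmse:.3f}")
print(f"RMSE on held-out rows: {holdout_rmse:.3f}")
```

A flexible model can look almost perfect on rows it has memorized, so only the held-out number says anything about generalization. Training on synthetic rows and testing on real held-out rows keeps the two models on the same yardstick.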

Conclusion

Synthetic data isn’t automatically a safe or superior alternative to real data. For it to really work, it needs to be statistically accurate, privacy-preserving (meaning real records can’t be reverse-engineered from it), and used responsibly, especially when working in regulated areas. Techniques like differential privacy, and models built around it such as PATE-GAN, tackle these concerns.

The perks of synthetic data? You reduce privacy exposure, it’s great for prototyping and testing, and you can generate large datasets quickly. But it’s not all sunshine and rainbows. Sometimes synthetic data misses those tiny but important correlations, and models built on it don’t always carry over cleanly to real-world production. Oh, and there’s always the risk that the generator overfits and ends up reproducing rows that are too close to the real ones.
