Hehe, yes, I’m back! The guy who’s adding Rust to everything, because why not? It’s fun! I’m not saying what I do is always the best, but I do believe it’s worth giving a shot sometimes.

TensorFlow.js isn’t something I’ve tried for very long, and WASM… Okay, actually, I still haven’t used it much. But machine learning? Yeah, that’s something I do often!

And Rust? Well, Rust is just the concept I keep adding to everything, that’s kind of the point of this blog. So today, we’re going to build a simple regression model (don’t worry, it’s really straightforward, no extra ML knowledge needed) using TensorFlow.js with the WASM backend enabled. We’ll also bring in a Rust WASM module to speed up the data normalization process.

Yeah, the whole idea here is speed.

Why Not Just Use Python?

Hey, we don’t ask those kinds of questions here, but let me tell you anyway, lol.

Maybe you just don’t want a backend server?

And if you’re already in the browser, JavaScript and WebAssembly are your tools. TensorFlow.js gives you real ML capabilities on the client side. Rust, when compiled to WASM, lets you bring in serious performance for custom data preprocessing or number crunching, safely and fast.

Maybe you hate Python too???

Okay, those can be reasons why this article exists, but honestly, there’s no need for logic. This is just what I like and what I do.

If we’re talking speed:

- Model inference (GPU) is probably better with Python (thanks to CUDA), but hey, if you really want performance, you can just go full Rust with native crates!

- Custom CPU-heavy logic? Rust (via WASM) is fantastic for that.

- In-browser performance? Yeah, that’s where our approach shines.

So yeah, even if you just hate Python, that’s reason enough to try this. You don’t need a good reason, we’re here to have fun, not do business.

Rust WASM Module

mkdir rusty_tf

cd rusty_tf

cargo new --lib rust_normalizer

cd rust_normalizer

[lib]

crate-type = ["cdylib"]

[dependencies]

wasm-bindgen = "0.2"

Start by creating the main project folder and, inside it, the Rust library project. Then switch to the Rust folder and add the [lib] and [dependencies] entries above to your Cargo.toml file.

use wasm_bindgen::prelude::*;

#[wasm_bindgen]

pub fn normalize(data: &[f32]) -> Vec<f32> {

let min = data.iter().cloned().fold(f32::INFINITY, f32::min);

let max = data.iter().cloned().fold(f32::NEG_INFINITY, f32::max);

data.iter()

.map(|x| (x - min) / (max - min))

.collect()

}

Next, we create our normalize function in Rust. You can simply open and edit the lib.rs file for this.

First, we import wasm_bindgen to enable communication between Rust and JavaScript. The #[wasm_bindgen] attribute is added to the function to make it accessible from JavaScript.

This function takes a list of numbers, finds the minimum and maximum values, then scales each number to a range between 0 and 1 using the formula (x - min) / (max - min).

It returns a new list of normalized numbers, making data preprocessing fast and efficient right in the browser.
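One thing the formula doesn’t cover: if every value in the input is the same, max - min is 0 and each result comes out as NaN. Here’s a minimal sketch of a guarded variant. The name normalize_safe and the choice to return zeros in that case are my assumptions, not part of the module above, and I left out the #[wasm_bindgen] attribute so it runs as plain Rust.

```rust
/// Min-max scaling to the range [0, 1], with a guard for constant input.
/// Hypothetical variant of `normalize`; in the WASM build it would also
/// carry the #[wasm_bindgen] attribute.
pub fn normalize_safe(data: &[f32]) -> Vec<f32> {
    let min = data.iter().cloned().fold(f32::INFINITY, f32::min);
    let max = data.iter().cloned().fold(f32::NEG_INFINITY, f32::max);
    let range = max - min;

    if range == 0.0 {
        // All values are identical, so (x - min) / 0 would be NaN.
        // Returning zeros is an assumed convention, pick what suits your data.
        return vec![0.0; data.len()];
    }

    data.iter().map(|x| (x - min) / range).collect()
}

fn main() {
    // Same sample data as the JavaScript side: min = 10, max = 50.
    let scaled = normalize_safe(&[10.0, 20.0, 30.0, 40.0, 50.0]);
    println!("{:?}", scaled); // [0.0, 0.25, 0.5, 0.75, 1.0]

    // Constant input no longer produces NaN.
    println!("{:?}", normalize_safe(&[7.0, 7.0, 7.0])); // [0.0, 0.0, 0.0]
}
```

For the sample data, 30 becomes (30 - 10) / (50 - 10) = 0.5, which is a handy number to remember for the prediction step later.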

cargo install wasm-pack

wasm-pack build --target web

First, install wasm-pack if you don’t have it already (sometimes I wonder why I even explain this, those commands are so close to human language). After that, we build the project.

mkdir -p ../web/rust_wasm

cp pkg/*.wasm pkg/*.js ../web/rust_wasm/

At the end, copy all .wasm and .js files from the pkg folder to ../web/rust_wasm/ for a clean project structure.

Frontend Setup

npm init -y

npm install @tensorflow/tfjs @tensorflow/tfjs-backend-wasm

Go to the web folder, initialize npm, and install TensorFlow.js.

<!doctype html>

<html lang="en">

<head>

<meta charset="UTF-8" />

<title>Rusty TF</title>

<script src="https://cdn.jsdelivr.net/npm/@tensorflow/tfjs@4.16.0/dist/tf.min.js"></script>

<script src="https://cdn.jsdelivr.net/npm/@tensorflow/tfjs-backend-wasm@4.16.0/dist/tf-backend-wasm.min.js"></script>

</head>

<body>

<h1>Rusty TF (Experiment)</h1>

<script type="module" src="./main.js"></script>

</body>

</html>

The index.html file will simply serve our JavaScript in the browser. We also define some basic things like the language, character set, a title, and a heading.

import init, { normalize } from "./rust_wasm/rust_normalizer.js";

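// `tf` is the global created by the TensorFlow.js <script> tags in index.html.

// Bare imports like '@tensorflow/tfjs' don't resolve in the browser without a bundler or an import map.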

async function run() {

await tf.setBackend('wasm');

await tf.ready();

await init();

const data = [10, 20, 30, 40, 50];

const normalizedData = normalize(data);

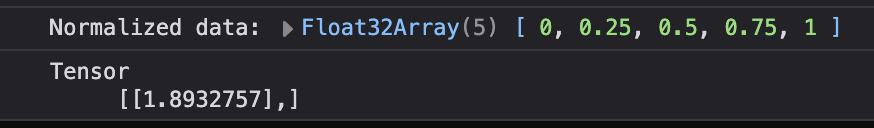

console.log("Normalized data:", normalizedData);

// Create simple regression model

const model = tf.sequential();

model.add(tf.layers.dense({ units: 1, inputShape: [1] }));

model.compile({ optimizer: 'sgd', loss: 'meanSquaredError' });

// Prepare training data

const xs = tf.tensor2d(normalizedData, [normalizedData.length, 1]);

const ys = tf.tensor2d(normalizedData.map(x => 2 * x + 1), [normalizedData.length, 1]);

await model.fit(xs, ys, { epochs: 100 });

const output = model.predict(tf.tensor2d([0.5], [1, 1]));

output.print();

}

run();And now here we are, our main JavaScript file. If you’ve ever worked with other ML libraries or maybe used TensorFlow in Python, this will feel pretty familiar.

We start by setting the TensorFlow.js backend to WASM, which is generally faster than the plain JavaScript CPU backend. Then we define a simple data array (you could also import this from a CSV file, or even preprocess it in Rust and read it in as JSON if you want things extra clean and fast).
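If you go the CSV route and want Rust to handle it, here’s a rough sketch of what pulling one numeric column out of CSV text could look like. The function name parse_column and the sample columns are made up for illustration, and this is a naive comma split that assumes a header row and no quoted fields, so reach for a real CSV crate for anything serious.

```rust
/// Hypothetical helper: parse one numeric column out of CSV text.
/// Assumes a header row and plain comma-separated fields (no quoting).
fn parse_column(csv: &str, column: usize) -> Result<Vec<f32>, String> {
    csv.lines()
        .skip(1) // skip the header row
        .filter(|line| !line.trim().is_empty())
        .map(|line| -> Result<f32, String> {
            let field = line
                .split(',')
                .nth(column)
                .ok_or_else(|| format!("missing column {column} in line: {line}"))?;
            field
                .trim()
                .parse::<f32>()
                .map_err(|e| format!("bad number {field:?}: {e}"))
        })
        .collect() // stops at the first error
}

fn main() {
    // Made-up sample data with the same values as the JavaScript array.
    let csv = "day,value\n1,10\n2,20\n3,30\n4,40\n5,50\n";
    match parse_column(csv, 1) {
        Ok(values) => println!("{:?}", values),
        Err(e) => eprintln!("{e}"),
    }
}
```

The resulting Vec<f32> could then go straight into the normalize function, so parsing and scaling both stay on the Rust side.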

Next, we create a basic model, just a single dense layer, and compile it using an optimizer and a loss function, which is pretty much standard in any ML workflow.

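The training inputs are the values we normalized with the Rust WASM module, and the targets are generated from them with y = 2x + 1. We feed both into the model and train for 100 epochs. Finally, we run a prediction for a single input, 0.5. That value does appear in the normalized training set (30 maps to 0.5), so treat it as a sanity check rather than a test on unseen data: the closer the output is to 2 × 0.5 + 1 = 2, the better the model learned.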
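If you’re curious what a single dense unit trained with SGD on mean squared error boils down to, here’s a plain Rust sketch of the same idea: one weight, one bias, and a gradient step per epoch. This is not how TensorFlow.js implements it internally. The Linear struct, the zero initialization, the learning rate, and the epoch count are all my assumptions, picked so the toy example converges.

```rust
/// One dense unit with a single input: prediction = w * x + b.
struct Linear {
    w: f32,
    b: f32,
}

impl Linear {
    fn predict(&self, x: f32) -> f32 {
        self.w * x + self.b
    }

    /// One full-batch gradient descent step on mean squared error.
    fn step(&mut self, xs: &[f32], ys: &[f32], lr: f32) {
        let n = xs.len() as f32;
        let (mut grad_w, mut grad_b) = (0.0f32, 0.0f32);
        for (&x, &y) in xs.iter().zip(ys) {
            let err = self.predict(x) - y;
            // d/dw and d/db of mean((w * x + b - y)^2)
            grad_w += 2.0 * err * x / n;
            grad_b += 2.0 * err / n;
        }
        self.w -= lr * grad_w;
        self.b -= lr * grad_b;
    }
}

/// Trains from w = 0, b = 0 (an assumed starting point).
fn train(xs: &[f32], ys: &[f32], epochs: usize, lr: f32) -> Linear {
    let mut model = Linear { w: 0.0, b: 0.0 };
    for _ in 0..epochs {
        model.step(xs, ys, lr);
    }
    model
}

fn main() {
    // The normalized sample data and the same target rule, y = 2x + 1.
    let xs: [f32; 5] = [0.0, 0.25, 0.5, 0.75, 1.0];
    let ys: Vec<f32> = xs.iter().map(|&x| 2.0 * x + 1.0).collect();

    // Illustrative hyperparameters, not TensorFlow.js defaults.
    let model = train(&xs, &ys, 2000, 0.1);
    println!(
        "w = {:.3}, b = {:.3}, f(0.5) = {:.3}",
        model.w,
        model.b,
        model.predict(0.5)
    );
}
```

With these settings the weight and bias should land close to 2 and 1, which is the line the targets were generated from.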

Testing

npx serve .

Run a local server inside your web folder, then open the URL in your browser. The output shows up in the browser console.

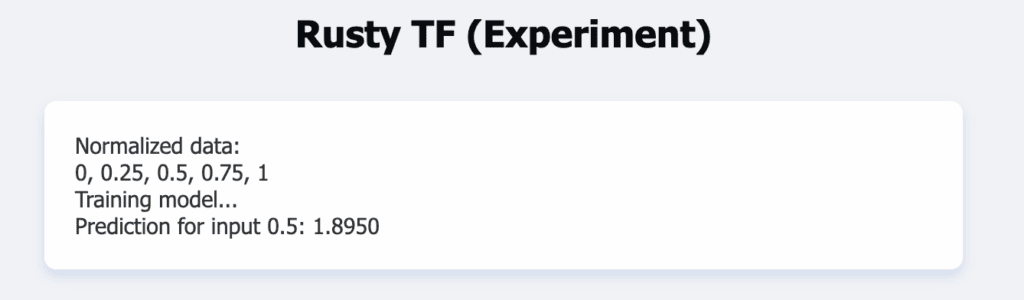

Works well, but I think we can make it better with a prettier UI. Let’s take this output and present it in a more visually appealing way.

To be honest, the main reason isn’t prettiness. It’s troublesome to open the inspector every time, and I just want to see the output immediately when I open the URL.

body {

font-family: 'Segoe UI', Tahoma, Geneva, Verdana, sans-serif;

max-width: 600px;

margin: 40px auto;

padding: 0 20px;

background: #f0f2f5;

color: #333;

}

h1 {

text-align: center;

color: #080C12;

}

#output {

margin-top: 30px;

background: #fff;

padding: 20px;

border-radius: 8px;

box-shadow: 0 4px 8px rgba(74, 144, 226, 0.2);

font-size: 1.2rem;

white-space: pre-wrap;

}

<!DOCTYPE html>

<html lang="en">

<head>

<meta charset="UTF-8" />

<title>Rusty TF</title>

<link rel="stylesheet" href="./styles.css" />

<script src="https://cdn.jsdelivr.net/npm/@tensorflow/tfjs@4.16.0/dist/tf.min.js"></script>

<script src="https://cdn.jsdelivr.net/npm/@tensorflow/tfjs-backend-wasm@4.16.0/dist/tf-backend-wasm.min.js"></script>

</head>

<body>

<h1>Rusty TF (Experiment)</h1>

<div id="output">Running model...</div>

<script type="module" src="./main.js"></script>

</body>

</html>

import init, { normalize } from "./rust_wasm/rust_normalizer.js";

async function run() {

const outputEl = document.getElementById('output');

try {

outputEl.textContent = "Loading WASM backend...";

await tf.setBackend('wasm');

await tf.ready();

outputEl.textContent = "Initializing Rust module...";

await init();

const data = [10, 20, 30, 40, 50];

const normalizedData = normalize(data);

outputEl.textContent = `Normalized data:\n${Array.from(normalizedData).join(', ')}`;

const model = tf.sequential();

model.add(tf.layers.dense({ units: 1, inputShape: [1] }));

model.compile({ optimizer: 'sgd', loss: 'meanSquaredError' });

const xs = tf.tensor2d(normalizedData, [normalizedData.length, 1]);

const ys = tf.tensor2d(normalizedData.map(x => 2 * x + 1), [normalizedData.length, 1]);

outputEl.textContent += "\nTraining model...";

await model.fit(xs, ys, { epochs: 100 });

const output = model.predict(tf.tensor2d([0.5], [1, 1]));

const prediction = output.dataSync()[0];

outputEl.textContent += `\nPrediction for input 0.5: ${prediction.toFixed(4)}`;

} catch (err) {

outputEl.textContent = `Error: ${err.message}`;

}

}

run();

We simply fetch the output div using const outputEl = document.getElementById('output') inside the JavaScript file. Then, we update its text at each step of the process. We also added some CSS to style it and make it look neat. Other than that, nothing else changed in the main.js file. (I’m ignoring the try-catch part, since that’s just error handling and there’s not much to say about it.)

Conclusion

Simple, pretty, everything runs in the browser, no Python, just JavaScript and Rust. I can say we’ve done this pretty well.

It was a fun project, but now that we’re at the conclusion, it’s time to talk about whether this approach is really worth trying or if you should just ignore it.

If you’re working on the client side and want to add some machine learning models to your product without involving a server process, this is definitely worth it, no need to say more, just dive in and learn it.

If you want to try something new and don’t want to sacrifice too much performance on the client side, it’s still worth it because the WASM backend and Rust modules are fast enough.

Of course, if we’re talking about general modeling and don’t need to work in the browser, this approach can be completely unnecessary and time-consuming. For that, Python or pure Rust libraries will be better suited, as expected (it’s a no-server approach after all, duh).

But it’s still fun! And yes, TensorFlow.js is a great choice for JavaScript developers who don’t want to use any other language for ML modeling. Plus, TensorFlow.js can also be used in Node.js, not just the browser! But since today I focused on the browser part, I didn’t cover that.

Back in the days, poor old me.